Favour Kpokpe: Deep Dive Into the Kaggle Stroke Prediction Dataset

Favour Kpokpe, Pharmacist at Mater Misericordiae Hospital, shared on LinkedIn:

”Stroke remains one of the leading causes of death globally, yet many risk factors are measurable long before an event occurs.

I just published a deep dive into the Kaggle Stroke Prediction dataset, where I applied EDA, imbalance handling with SMOTE, and multiple ML models to evaluate real-world stroke risk prediction.

Exploring:

- Age, hypertension, heart disease, and glucose levels are strong drivers

- Tuned Logistic Regression achieved 84% recall for stroke cases

- Accuracy is misleading in imbalanced medical data

- Probability calibration and bias, especially around gender, remain serious concerns

The model works well for risk ranking, not diagnosis.

If you are working at the intersection of healthcare and machine learning, this analysis highlights the practical trade-offs between recall, precision, calibration, and fairness.

1.0 Introduction

Stroke remains one of the leading causes of death worldwide, responsible for approximately 11% of total deaths.

In an era where data science increasingly intersects with healthcare, predictive modeling offers a promising pathway to support early diagnosis and prevention.

In this article, I explore the Kaggle-hosted Stroke Prediction Dataset by Fede Soriano, which aggregates demographic, lifestyle, and medical features to assess stroke risk.

Through a structured exploratory data analysis (EDA) and comparative machine-learning modeling, I aim to uncover the key risk factors, evaluate model performance, and surface important learnings around bias, calibration, and real-world applicability.

2.0 Dataset Overview

The Stroke Prediction Dataset (Fede Soriano, Kaggle) contains 5,110 patient records, each described by 11 features that capture demographic, lifestyle, and medical risk factors related to stroke.

Features:

- Demographics: gender, age, ever_married, work_type, Residence_type

- Medical History: hypertension, heart_disease

- Health Indicators: avg_glucose_level, bmi, smoking_status

- Target Variable: stroke, 1 if the patient has had a stroke, 0 otherwise

3.0 Exploratory Data Analysis

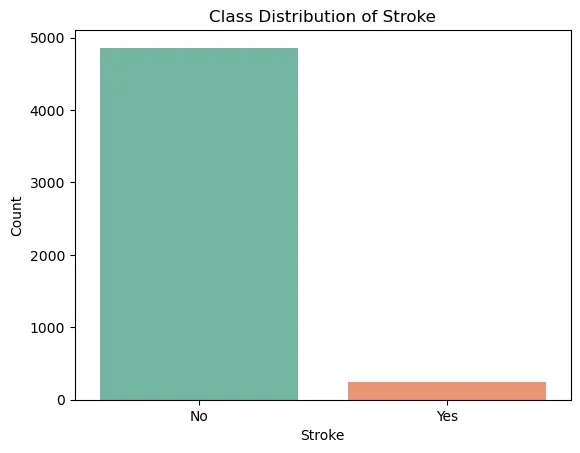

3.1 Class Distribution of the Target Variable

The dataset is highly imbalanced; only about 4.9% of patients experienced a stroke.

This imbalance poses a key challenge for model training and evaluation, as traditional accuracy metrics may not fully reflect real predictive power.
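To make the accuracy problem concrete, here is a minimal sketch of what a model that always predicts "no stroke" would score. The count of 250 positives is an assumed round figure consistent with the ~4.9% rate above, not a number taken from the dataset.

```python
def majority_baseline(n_total: int, n_positive: int) -> dict:
    """Metrics for a classifier that always predicts the majority (non-stroke) class."""
    n_negative = n_total - n_positive
    accuracy = n_negative / n_total  # every negative is right, every positive is wrong
    recall_positive = 0.0            # no stroke case is ever flagged
    return {"accuracy": accuracy, "recall_positive": recall_positive}


# 5,110 records; 250 positives is an assumption matching the ~4.9% rate
result = majority_baseline(n_total=5110, n_positive=250)
print(f"accuracy={result['accuracy']:.3f}, stroke recall={result['recall_positive']:.0%}")
```

A model can therefore reach roughly 95% accuracy while detecting no stroke cases at all, which is why recall and precision for the stroke class are more informative here.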

3.2 Distribution of the Continuous Features (bmi and avg_glucose_level)

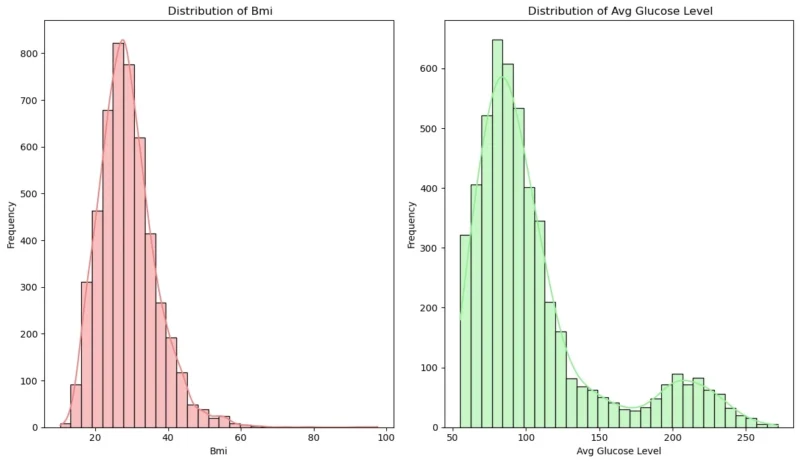

3.2.0 Histplot

Distribution of BMI

- The BMI distribution is roughly bell-shaped with a right skew

- The peak is around 25-30 BMI, which falls in the overweight range

- Most values cluster between 20-40, with relatively few extreme values

- Very few individuals have BMI below 15 or above 50

Distribution of Average Glucose Level

- This shows a strongly right-skewed distribution with a long tail

- The peak is around 80-100 mg/dL, which is in the normal fasting glucose range

- There’s a substantial right tail extending to 250+ mg/dL, indicating the presence of individuals with elevated glucose levels (pre-diabetic or diabetic range)

- The second smaller cluster around 200+ suggests there may be a subpopulation with diabetes or glucose regulation issues

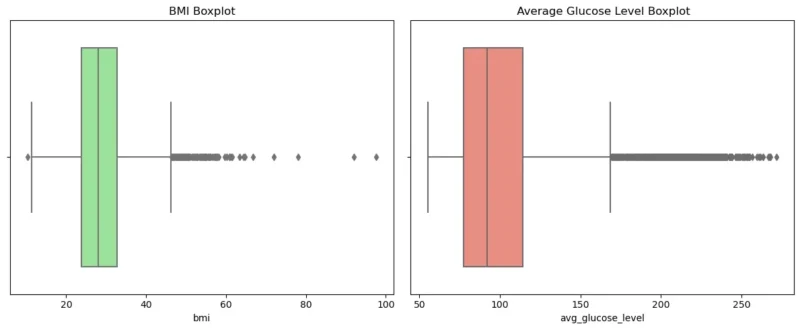

3.2.1 Boxplot

The boxplots above show multiple outliers in both BMI and average glucose level.

BMI: The median falls around 28. There are several high outliers beyond 40, with extreme values up to ~97. The distribution is right-skewed.

Avg Glucose Level: The median is around 100. Outliers appear above ~150 and extend beyond 250. The distribution is also right-skewed.

Both features contain numerical outliers and skewed distributions. This suggests the need for scaling or transformation, and possibly outlier handling, before modeling.
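The outliers drawn on a boxplot usually come from the IQR rule. The sketch below shows that rule with the conventional 1.5 multiplier; the BMI values are made up for illustration and the helper name `iqr_fences` is hypothetical.

```python
import statistics


def iqr_fences(values, k=1.5):
    """Return (lower, upper) fences: Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


# Hypothetical BMI values, not rows from the dataset
bmi = [18.5, 22.0, 24.3, 26.1, 27.9, 28.4, 30.2, 33.0, 36.5, 97.0]
low, high = iqr_fences(bmi)
outliers = [v for v in bmi if v < low or v > high]
print(f"fences=({low:.2f}, {high:.2f}), outliers={outliers}")
```

Flagging a value this way does not mean it should be removed. A BMI or glucose reading far above the upper fence can be a real clinical measurement.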

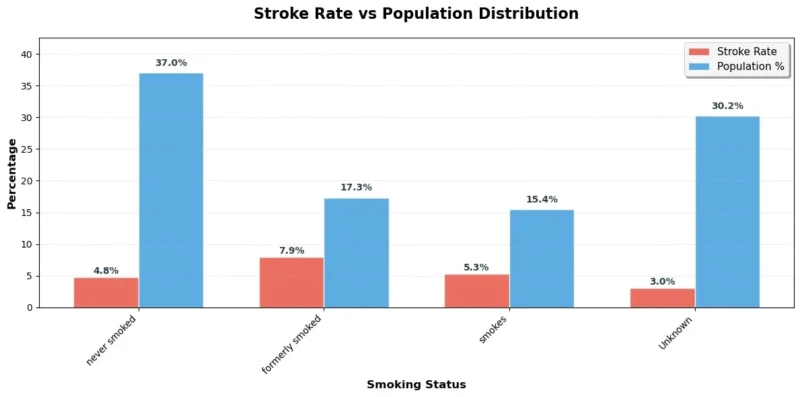

3.3 Relationship Between Stroke Rate and Population Distribution

Interpretation: Stroke Rate vs Population Distribution by Smoking Status

The formerly smoked group has the highest stroke rate (7.9%), despite making up only 17.3% of the population.

Current smokers (5.3%) and never-smokers (4.8%) have similar stroke rates, though never-smokers represent the largest group (37%).

The Unknown group has the lowest stroke rate (3%) but a large population share (30.2%), which may reflect data quality issues or unreported habits.

Stroke risk appears highest among ex-smokers, possibly due to cumulative smoking damage.

This suggests that past smoking history is still a strong risk factor, and underscores the need for early cessation.
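The two quantities compared in this chart, stroke rate within a group and that group's share of the population, can be computed as in the sketch below. The ten records are toy data and the function name `rate_and_share` is hypothetical.

```python
from collections import defaultdict


def rate_and_share(records, group_key, target_key="stroke"):
    """Per group: stroke rate within the group and the group's share of all rows."""
    counts = defaultdict(lambda: [0, 0])  # group -> [n_rows, n_strokes]
    for row in records:
        counts[row[group_key]][0] += 1
        counts[row[group_key]][1] += row[target_key]
    total = len(records)
    return {
        group: {"stroke_rate": strokes / n, "population_share": n / total}
        for group, (n, strokes) in counts.items()
    }


# Toy records for illustration only
records = (
    [{"smoking_status": "formerly smoked", "stroke": s} for s in (1, 0)]
    + [{"smoking_status": "never smoked", "stroke": s} for s in (1, 0, 0, 0, 0)]
    + [{"smoking_status": "Unknown", "stroke": s} for s in (0, 0, 0)]
)
summary = rate_and_share(records, "smoking_status")
for group, stats in summary.items():
    print(group, stats)
```

The same function applies to any categorical column, such as work_type or ever_married.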

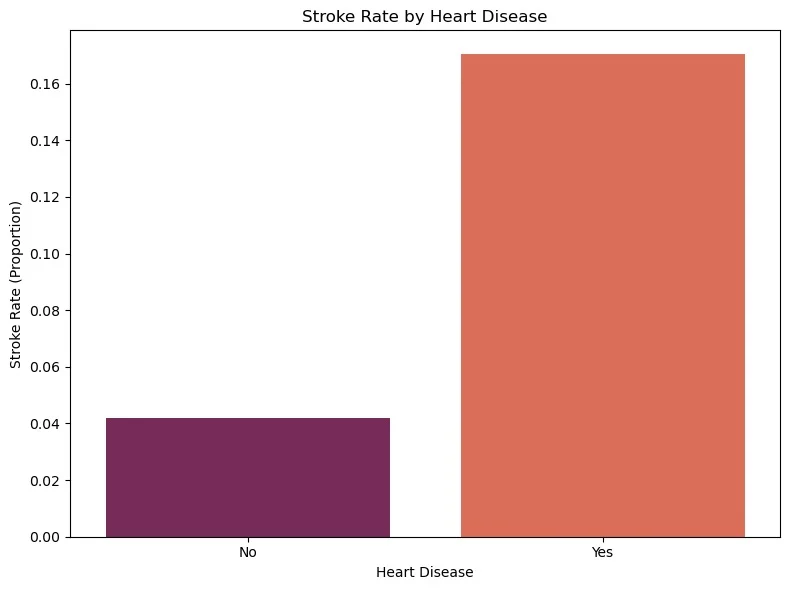

3.4 Stroke Rate by Heart Disease

From the bar plot:

People with heart disease have a significantly higher stroke rate (~17%) compared to those without heart disease (~4.1%).

From the dataset, people with heart disease have an approximately 314% higher relative stroke risk compared to those without heart disease.
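The relative-risk figure follows directly from the two stroke rates. As a small sketch (the helper name `relative_increase` is hypothetical), using the approximate rates read from the bar plot:

```python
def relative_increase(rate_exposed, rate_unexposed):
    """Percentage by which the exposed group's rate exceeds the unexposed group's."""
    return (rate_exposed / rate_unexposed - 1) * 100


# Approximate rates quoted above: ~17% with heart disease, ~4.1% without
ratio = 17.0 / 4.1
heart_disease_increase = relative_increase(17.0, 4.1)
print(f"risk ratio = {ratio:.2f}x, relative increase = {heart_disease_increase:.1f}%")
```

Because the inputs are rounded chart readings, the result should be treated as approximate. Note also that "about 4 times the risk" and "about 314% higher" describe the same ratio.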

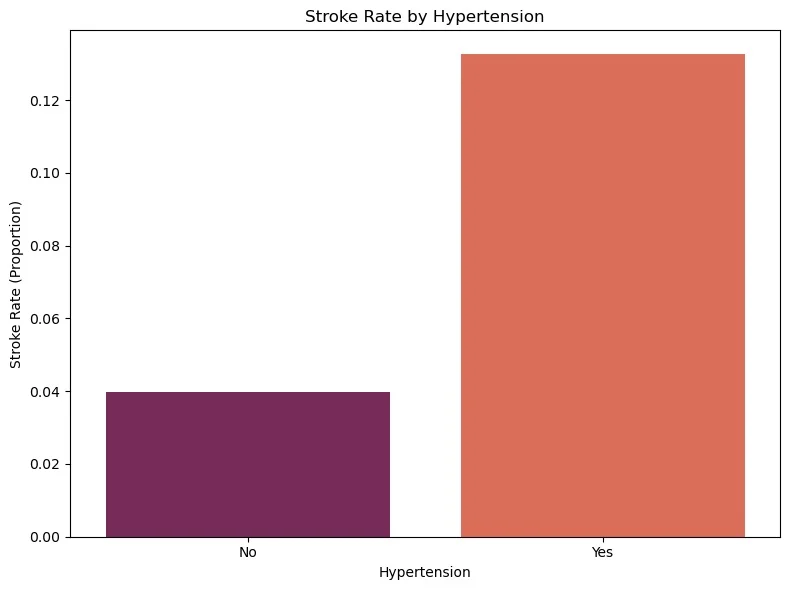

3.5 Stroke Rate by Hypertension

Hypertension dramatically increases stroke risk by over 3x:

- No hypertension: ~4% stroke rate

- With hypertension: ~13% stroke rate

This represents a 225% increase in stroke risk for people with hypertension.

This finding confirms hypertension as one of the most significant modifiable risk factors for stroke, making blood pressure control critical for stroke prevention.

The magnitude of this difference underscores why hypertension management is a cornerstone of cardiovascular health and stroke prevention strategies.

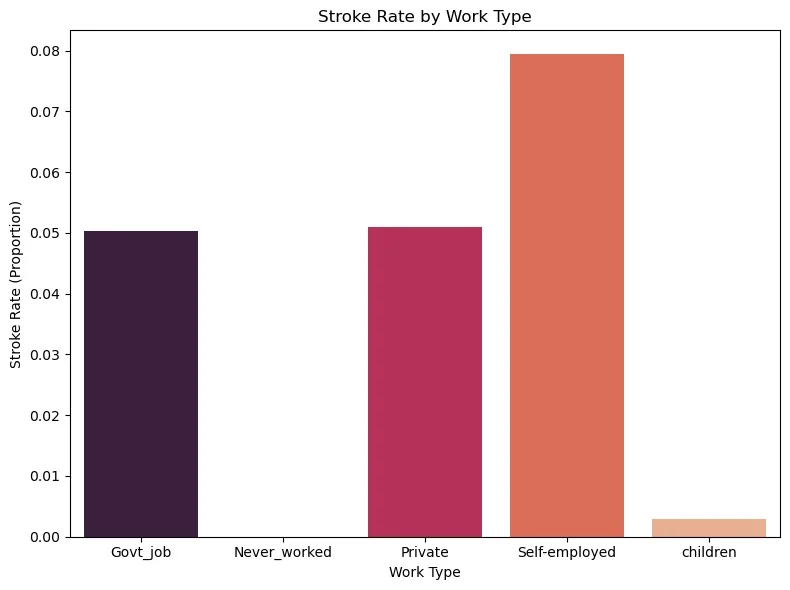

3.6 Stroke Rate by Work Type

Self-employed workers have the highest stroke risk at ~7.9% – significantly higher than all other work types.

Stroke rates by work type:

- Self-employed: ~7.9% (highest risk)

- Government job: ~5.0%

- Private sector: ~5.1%

- Never worked: ~0% (virtually no strokes)

- Children: ~0.3% (very low, as expected)

Possible Explanations

Self-employed higher risk could be due to:

- Higher stress levels from business ownership responsibilities

- Irregular work schedules and work-life balance issues

- Less access to employer health benefits and regular healthcare

- Potentially older age group (more established in careers)

- Lifestyle factors related to entrepreneurial demands

Key Takeaway:

Self-employment appears to be associated with significantly elevated stroke risk, potentially highlighting the importance of stress management and healthcare access for entrepreneurs and business owners.

The near-zero rate for the never-worked group suggests it may consist predominantly of younger or healthier individuals, or of those unable to work due to other factors.

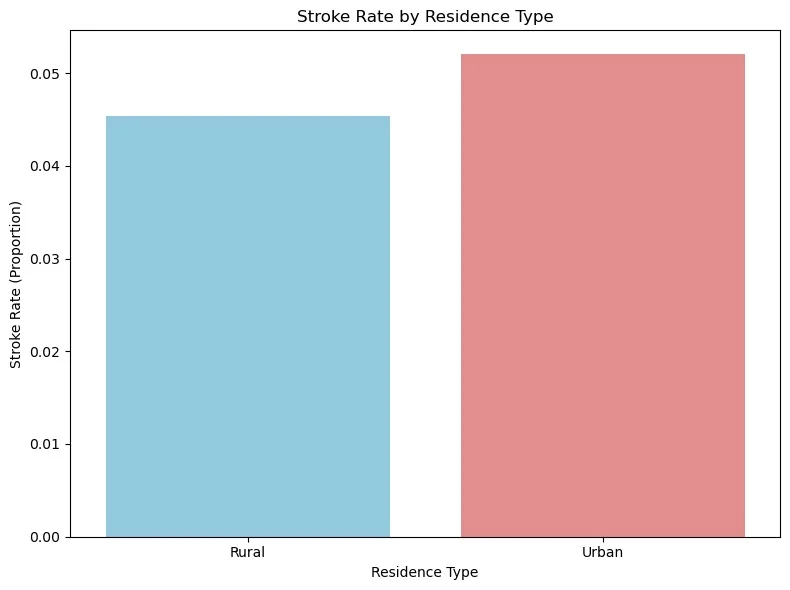

3.7 Stroke Rate by Residence Type

Urban residents have slightly higher stroke risk than rural residents:

- Urban: ~5.2% stroke rate

- Rural: ~4.5% stroke rate

This represents approximately 16% higher stroke risk for urban dwellers compared to rural residents.

Possible Explanations

Urban factors that may increase stroke risk:

- Higher stress levels from city living

- Air pollution and environmental toxins

- More sedentary lifestyles (less physical activity)

- Different dietary patterns (more processed foods)

- Higher cost of living stress

However, the difference is relatively small (~0.7 percentage points), suggesting that residence type is a minor risk factor compared to others like hypertension (which showed a 9 percentage point difference).

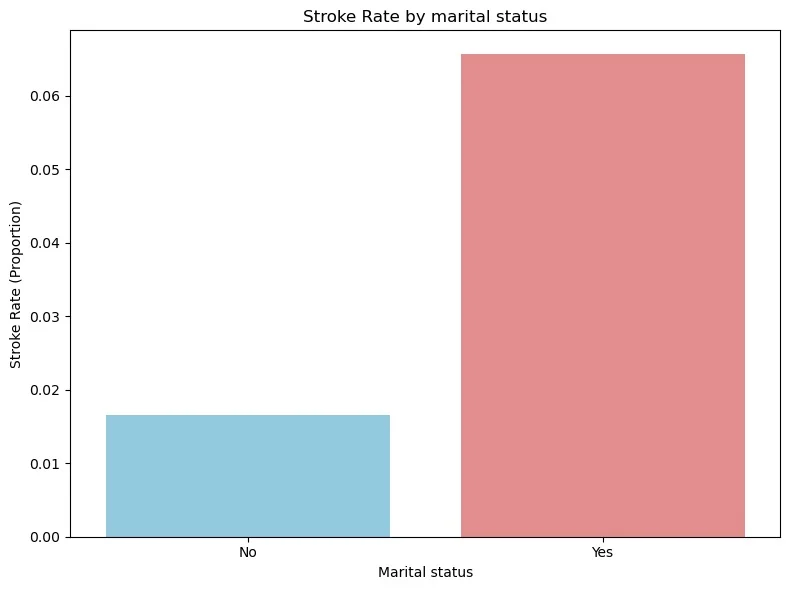

3.8 Stroke Rate by marital status

Never married (“No”): ~1.7% stroke rate

Ever married (“Yes”): ~6.5% stroke rate

Key Finding:

Ever married individuals have a stroke rate approximately 3.8 times that of never married individuals. That is about 280% higher relative risk (ever married vs never married).

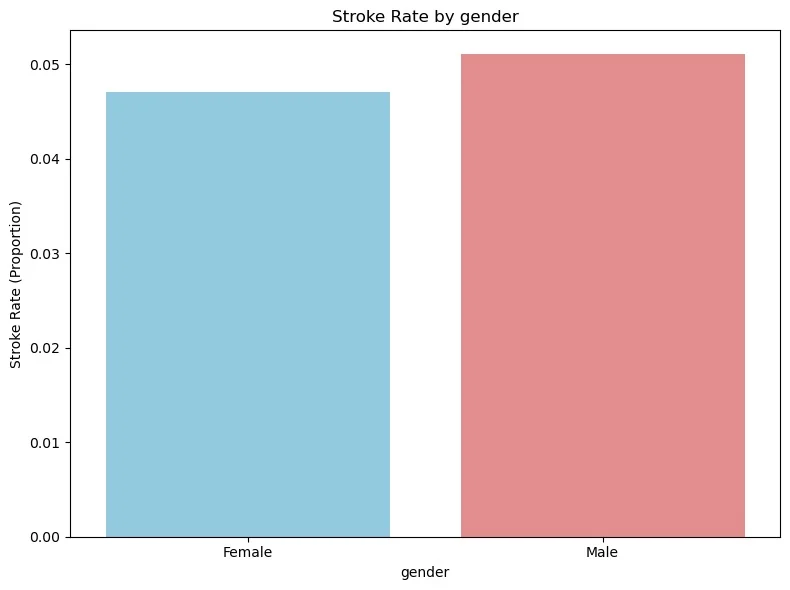

3.9 Stroke Rate by Gender

This stroke rate chart by gender provides a clear, standardized comparison and confirms the pattern observed in the raw counts.

Here’s the interpretation:

Stroke Rates by Gender:

- Females: Approximately 4.7% stroke rate

- Males: Approximately 5.1% stroke rate

Gender Difference

Males have a stroke rate about 0.4 percentage points higher than females.

This represents roughly an 8.5% higher relative risk for males compared to females.

The difference is smaller than my initial calculation from the first chart suggested.

Significance

This chart confirms that males have a higher stroke rate than females in this dataset, although both rates remain relatively low (under 6%).

Comparative Insight: Interestingly, based on these charts, residence type (urban vs rural) appears to be a stronger predictor of stroke risk than gender in this particular dataset, which is somewhat unexpected given that gender is typically considered a more significant stroke risk factor.

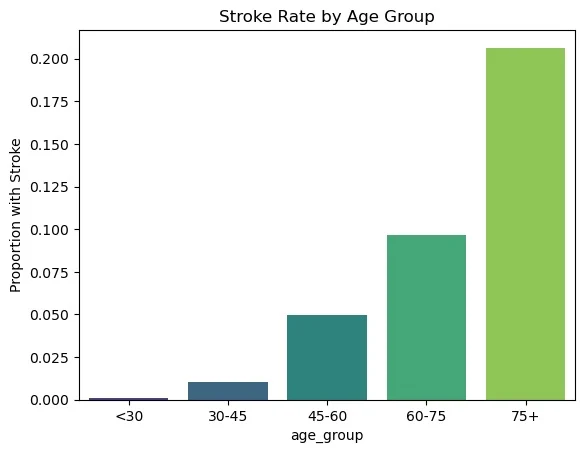

5.0 Stroke Rate by Age Group

Bar chart showing the proportion of stroke cases by age group

Stroke risk increases steadily with age.

Individuals aged 75+ have the highest stroke rate (~21%).

Those under 30 have a near-zero stroke rate.

The increase is gradual between 30 and 75, showing a strong age-related trend.

Older adults, especially those over 60, are at significantly higher risk of stroke. Age is a strong predictive factor in the dataset.

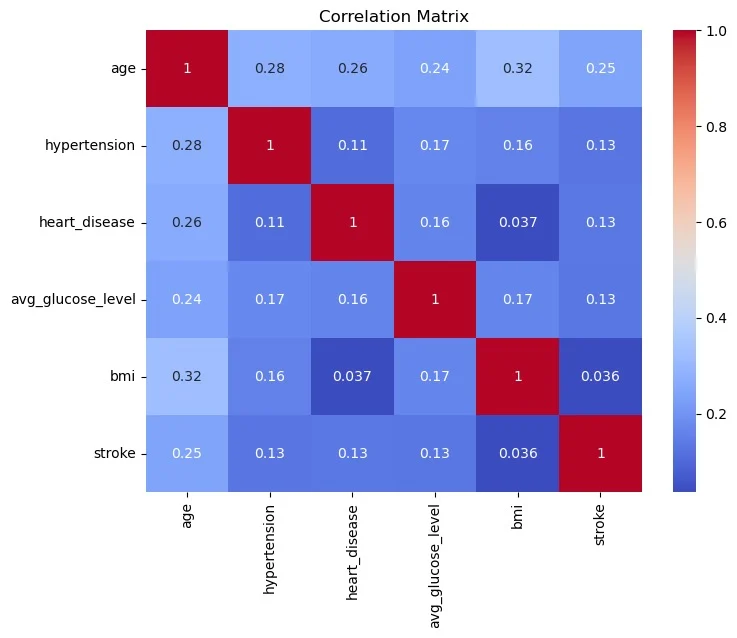

6.0 Correlation Analysis

Correlation heatmap showing relationships between numerical features and stroke.

Age has the strongest correlation with stroke (0.25), confirming the trend seen in the age group plot.

Hypertension, heart disease, and avg_glucose_level each show a correlation of about 0.13 with stroke, while BMI shows the weakest correlation (~0.036).

There is no sign of strong multicollinearity, as all pairwise inter-feature correlations are low (< 0.4).
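Each cell in a heatmap like this is typically a Pearson correlation coefficient. The sketch below computes one by hand on toy values. I am assuming Pearson here, since it is the default in most plotting workflows, and the data are illustrative only.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Toy data: five patients, with stroke occurring only in the two oldest
age = [20, 35, 50, 65, 80]
stroke = [0, 0, 0, 1, 1]
r = pearson(age, stroke)
print(f"r = {r:.3f}")
```

With a binary target, Pearson correlation tends to look small even when group rates differ a lot, so a value such as 0.25 for age can coexist with the steep age trend shown earlier.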

7.0 Health and Data Science Relevant Questions This EDA Helps Answer

Does having heart disease increase the likelihood of stroke? Yes, the bar chart suggests a strong association between heart disease and stroke incidence.

What is the magnitude of difference in stroke occurrence between those with and without heart disease? Roughly 4x higher risk among those with heart disease.

Can heart disease be considered a potential risk factor in stroke prediction models? Yes, the strong association suggests that heart disease should be a key feature in any predictive modeling (e.g., logistic regression, decision trees).

Are there preventable cardiovascular conditions that can help reduce stroke burden? This analysis underscores the need to manage heart disease proactively to reduce stroke risk.

How do comorbidities influence stroke outcomes? Heart disease, as a comorbidity, significantly influences stroke risk.

8.0 Data Preprocessing

The id variable was dropped, as it is not important to EDA or modeling.

The columns were converted to lowercase for uniformity.

Missing values were identified in the “bmi” variable and were imputed with the median for stability.

Outliers were identified in the “avg_glucose_level” and “bmi” features using an IQR-based method. Extreme values were not winsorized because they are clinically meaningful.

Numerical columns were transformed with StandardScaler while categorical columns were transformed with OrdinalEncoder.
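The imputation, scaling, and encoding steps above could be wired together roughly as follows. This is a sketch under assumptions: the pipeline layout, the choice to pass the binary columns through unchanged, and the four-row toy frame are mine, not taken from the original notebook.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OrdinalEncoder, StandardScaler

numeric_cols = ["age", "avg_glucose_level", "bmi"]
categorical_cols = ["gender", "ever_married", "work_type", "residence_type", "smoking_status"]

# Median imputation (fills the missing bmi values), then standardisation
numeric_pipeline = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

preprocessor = ColumnTransformer(
    [
        ("num", numeric_pipeline, numeric_cols),
        ("cat", OrdinalEncoder(), categorical_cols),
    ],
    remainder="passthrough",  # assumption: hypertension and heart_disease stay as 0/1
)

# Toy rows for illustration only
toy = pd.DataFrame({
    "gender": ["Male", "Female", "Female", "Male"],
    "age": [67.0, 45.0, 80.0, 33.0],
    "hypertension": [0, 1, 0, 0],
    "heart_disease": [1, 0, 0, 0],
    "ever_married": ["Yes", "Yes", "No", "No"],
    "work_type": ["Private", "Self-employed", "Govt_job", "Private"],
    "residence_type": ["Urban", "Rural", "Urban", "Rural"],
    "avg_glucose_level": [228.7, 95.1, 105.9, 80.4],
    "bmi": [36.6, np.nan, 32.5, 24.0],
    "smoking_status": ["formerly smoked", "never smoked", "Unknown", "smokes"],
})

# In a real workflow, fit on the training split only and reuse on the test split
X = preprocessor.fit_transform(toy)
print(X.shape)
```

One design point worth noting: OrdinalEncoder assigns integer codes that imply an order, which a linear model will read as a numeric scale. For nominal columns such as work_type, one-hot encoding is a common alternative.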

Class imbalance was handled with SMOTE.
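For readers unfamiliar with SMOTE, the core idea is to create synthetic minority samples by interpolating between a minority point and one of its nearest minority neighbours. The function below is a simplified illustration of that idea written from scratch. It is not the imbalanced-learn implementation, and the points are made up.

```python
import math
import random


def smote_sketch(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points on segments between a minority point
    and one of its k nearest minority neighbours (simplified SMOTE idea)."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to neighbour
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbour)))
    return synthetic


# Hypothetical scaled (age, avg_glucose_level) pairs for stroke cases
minority_points = [(1.2, 0.8), (1.5, 1.9), (0.9, 0.4), (1.8, 2.2), (1.1, 1.0)]
new_points = smote_sketch(minority_points, n_new=4)
print(new_points)
```

In practice one would typically use the `SMOTE` class from imbalanced-learn and resample the training split only, so that the test set keeps the real class distribution.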

9.0 Modelling and Evaluation

9.1 Model Selection

Four machine learning models were employed: Logistic Regression, Gaussian Naive Bayes (GaussianNB), RandomForest, and XGBoost.

Given the large disparity between non-stroke and stroke cases, weighted precision, recall, and F1 were used for fair performance measurement.

9.2 Model Evaluation

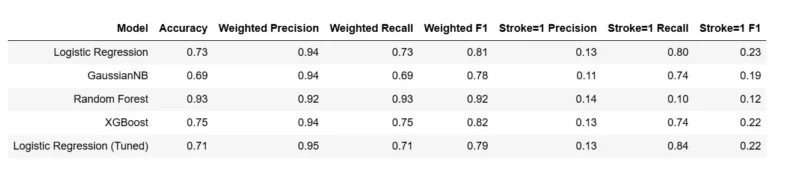

Logistic Regression (baseline and tuned) and XGBoost performed similarly, with overall accuracy of ~71–75%. Their recall for stroke (class 1) was high (~74–84%), meaning they catch most stroke cases, but precision was very low (~0.13), so they produce many false positives.

Naïve Bayes showed similar behavior: decent recall (74%) but poor precision (0.11).

Random Forest had excellent accuracy (93%) and precision for non-stroke cases, but very poor recall for stroke (0.10), meaning it missed most stroke patients.

For this imbalanced dataset, recall for stroke (class 1) is the most important metric (better to flag a potential stroke than miss it).

Logistic Regression (tuned) gave the best trade-off with 84% recall.

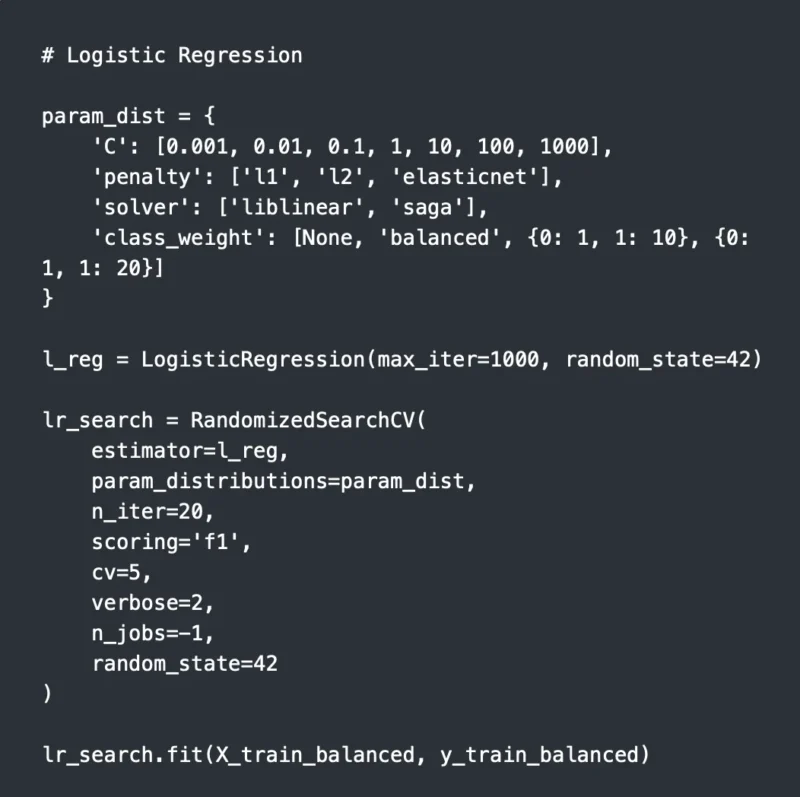

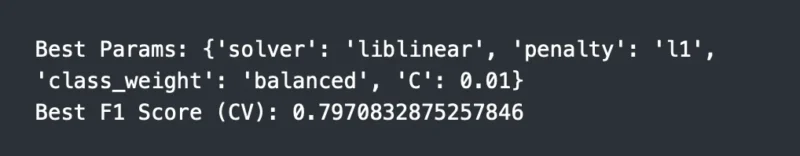

10.0 Hyperparameter Tuning and Cross-Validation

Hyperparameters were tuned with RandomizedSearchCV for the Logistic Regression model only, since it was the only model showing promising evaluation metrics, with a True Negative Rate of 80%.
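A randomized search of this kind might look like the sketch below. The search space, the recall scoring, the fold setup, and the synthetic data are all assumptions for illustration, since the actual settings are not listed here.

```python
from scipy.stats import loguniform
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RandomizedSearchCV, StratifiedKFold

# Synthetic imbalanced data standing in for the preprocessed stroke features
X, y = make_classification(
    n_samples=600, n_features=8, weights=[0.9, 0.1], random_state=0
)

# Hypothetical search space, not the one used in the original analysis
param_distributions = {
    "C": loguniform(1e-3, 1e2),           # inverse regularisation strength
    "class_weight": [None, "balanced"],
}

search = RandomizedSearchCV(
    estimator=LogisticRegression(max_iter=1000),
    param_distributions=param_distributions,
    n_iter=10,
    scoring="recall",  # optimise recall for the positive class
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
    random_state=0,
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Stratified folds keep the positive rate similar in every split, which matters when positives are rare.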

10.1 Tuning Parameters

10.2 Best Parameters and Cross-Validation Score

11.0 Performance Metrics Visualization

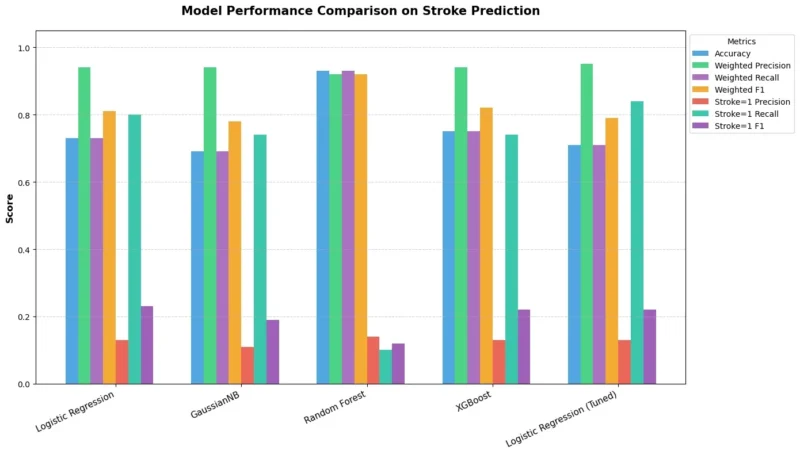

Random Forest achieved the highest accuracy at 93%, but it detected only 10% of actual stroke cases, making it clinically unreliable despite strong overall metrics.

Tuned Logistic Regression delivered the highest stroke recall at 84%, meaning it correctly identified most stroke cases at the cost of more false positives.

XGBoost and baseline Logistic Regression offered a more balanced trade-off, maintaining solid recall between 74% and 80% with stable overall performance.

In medical prediction, recall for the minority class matters more than accuracy, making Tuned Logistic Regression the most practical choice for stroke screening.

12.0 Model Evaluation

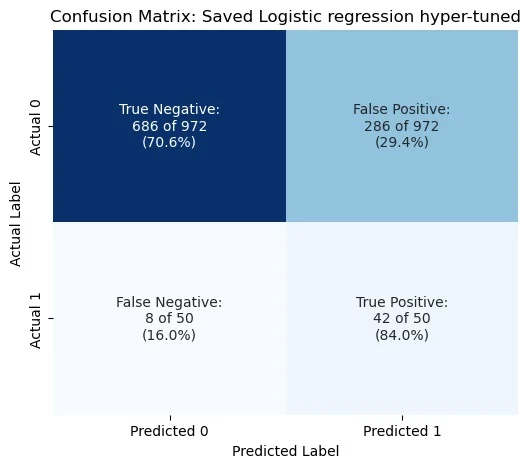

Confusion Matrix – Tuned Logistic Regression

The tuned Logistic Regression model correctly identified 84% of stroke cases, missing only 8 out of 50 patients.

This is a strong result for a screening-focused model where minimizing false negatives is critical.

The trade-off is an increase in false positives, with about 29% of non-stroke patients flagged as high risk.

In a healthcare context, this is an acceptable balance: it is better to investigate more patients than to miss a potential stroke case.
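The recall and false-positive figures above can be derived from the four confusion-matrix cells, as sketched below. The 42 true positives and 8 false negatives come from the text. The false-positive and true-negative counts are assumptions: I take a hypothetical 972 non-stroke test patients, of whom about 29% are flagged.

```python
def screening_metrics(tp, fn, fp, tn):
    """Recall, precision, and false positive rate from confusion-matrix counts."""
    return {
        "recall": tp / (tp + fn),
        "precision": tp / (tp + fp),
        "false_positive_rate": fp / (fp + tn),
    }


# tp and fn are from the article; fp and tn are assumed (972 negatives, ~29% flagged)
metrics = screening_metrics(tp=42, fn=8, fp=282, tn=690)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Under these assumed counts, precision lands near 0.13, which matches the low precision reported earlier. It also shows how a 29% false positive rate on a large negative class swamps 42 true positives.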

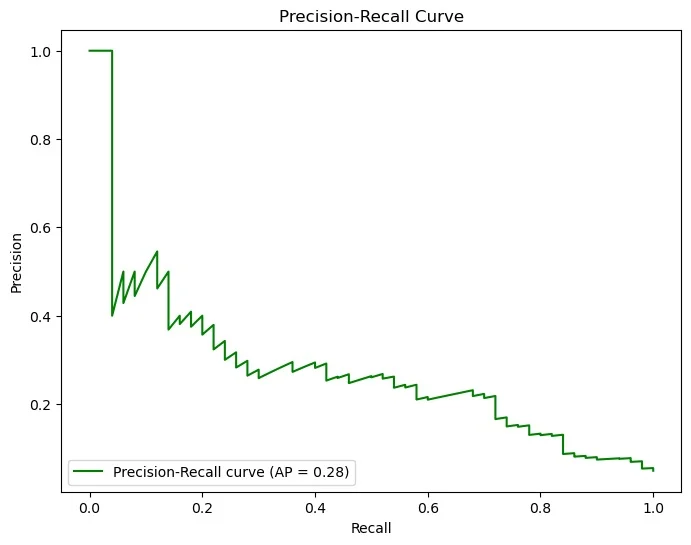

12.1 Precision-Recall Curve

The Precision–Recall curve highlights the challenge of predicting stroke in an imbalanced dataset.

While the model performs well at very low recall with high precision, precision drops sharply as recall increases.

With an Average Precision (AP) of 0.28, the model shows moderate ability to identify stroke cases but generates many false positives when trying to capture more of them.

This reinforces an important point: in rare-event prediction, strong recall often comes at the cost of precision.
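The trade-off is easy to see by moving the decision threshold over a handful of scored cases. This is a toy sketch with made-up labels and scores, and the helper name `precision_recall_at` is hypothetical.

```python
def precision_recall_at(y_true, y_score, threshold):
    """Precision and recall when every score >= threshold is flagged positive."""
    predicted = [s >= threshold for s in y_score]
    tp = sum(p and t == 1 for p, t in zip(predicted, y_true))
    fp = sum(p and t == 0 for p, t in zip(predicted, y_true))
    fn = sum((not p) and t == 1 for p, t in zip(predicted, y_true))
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy data: 3 positives among 10 cases, scores sorted from high to low
y_true = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
y_score = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.35, 0.3, 0.2, 0.1]

strict = precision_recall_at(y_true, y_score, threshold=0.85)
lenient = precision_recall_at(y_true, y_score, threshold=0.25)
print("strict threshold :", strict)
print("lenient threshold:", lenient)
```

Lowering the threshold captures every positive in this toy set, but most flagged cases are then false alarms, which is the same pattern the curve shows.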

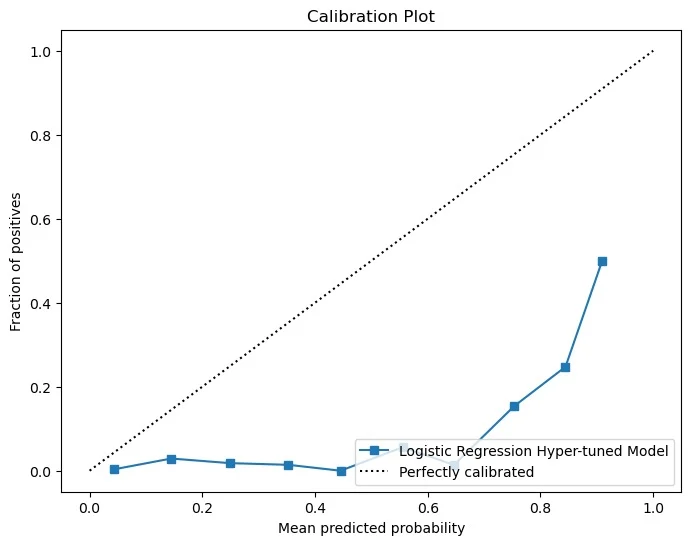

12.2 Calibration Plot and Brier score

The calibration plot shows that the model’s predicted probabilities do not align well with actual outcomes.

The curve sits far below the ideal diagonal line, indicating that when the model predicts high stroke risk, the true observed risk is much lower.

For example, predictions in the 0.8 to 0.9 range correspond to actual positive rates closer to 0.2 to 0.5.

This means the model may rank patients reasonably well, but its probability estimates are unreliable without recalibration, limiting its use in real clinical decision-making.
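A calibration plot and the Brier score can both be computed from predicted probabilities and true labels. The sketch below uses toy values chosen to mimic overconfident predictions; they are not the model's actual outputs.

```python
def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - t) ** 2 for p, t in zip(y_prob, y_true)) / len(y_true)


def calibration_bins(y_true, y_prob, n_bins=5):
    """Per non-empty bin: (mean predicted probability, observed positive rate, count)."""
    bins = [[] for _ in range(n_bins)]
    for t, p in zip(y_true, y_prob):
        index = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[index].append((p, t))
    return [
        (sum(p for p, _ in b) / len(b), sum(t for _, t in b) / len(b), len(b))
        for b in bins
        if b
    ]


# Toy labels and probabilities, for illustration only
y_true = [0, 0, 1, 0, 0, 0, 1, 0]
y_prob = [0.10, 0.15, 0.85, 0.90, 0.82, 0.30, 0.88, 0.86]

curve = calibration_bins(y_true, y_prob)
score = brier_score(y_true, y_prob)
for mean_pred, observed, count in curve:
    print(f"predicted {mean_pred:.2f} -> observed {observed:.2f} (n={count})")
print(f"Brier score: {score:.3f}")
```

In the highest bin the mean prediction is far above the observed rate, which is the pattern described above. Oversampling with SMOTE shifts the training prevalence, so some overestimation of this kind is expected. scikit-learn provides `calibration_curve`, `brier_score_loss`, and `CalibratedClassifierCV` for doing this on real model output.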

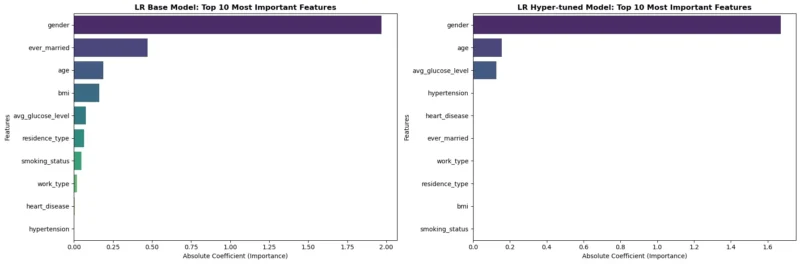

12.3 Feature Importance for Baseline Logistic Regression Model vs Hyper-Tuned Model

Both models show gender as the dominant predictor, but hyperparameter tuning reduced its coefficient from ~2.0 to ~1.65, indicating stronger regularization and less inflation.

In the tuned model, age and avg_glucose_level gain relative importance, while ever_married drops, suggesting better feature prioritization.

Overall coefficient shrinkage signals improved stability and reduced overfitting. However, the model remains heavily dependent on gender, which warrants bias and subgroup performance checks before deployment.

The fact that gender dominates in both versions suggests this is a data-level issue, not a modeling issue.

Further investigation should be carried out to ascertain whether gender is genuinely predictive of the target outcome or whether the signal reflects bias in the data.
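One simple subgroup check is to compute stroke recall separately for each gender and compare. The sketch below uses toy labels and predictions, and `recall_by_group` is a hypothetical helper name.

```python
from collections import defaultdict


def recall_by_group(y_true, y_pred, groups):
    """Recall for the positive class, computed separately per group."""
    hits = defaultdict(int)
    positives = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            positives[g] += 1
            hits[g] += int(p == 1)
    return {g: hits[g] / positives[g] for g in positives}


# Toy labels and predictions for illustration only
y_true = [1, 1, 1, 1, 0, 0, 1, 1]
y_pred = [1, 1, 0, 1, 0, 1, 1, 0]
gender = ["Male", "Male", "Male", "Male", "Male", "Female", "Female", "Female"]

subgroup_recall = recall_by_group(y_true, y_pred, gender)
print(subgroup_recall)
```

A large gap between groups would suggest the model misses stroke cases more often in one of them. With few positives per group, such gaps are noisy and are best read alongside confidence intervals.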

13.0 Conclusion

Stroke prediction in this dataset is driven primarily by age, hypertension, heart disease, and glucose levels, with gender emerging as a dominant but potentially biased signal.

Tuned Logistic Regression provided the most practical screening model, prioritizing recall (84%) to minimize missed stroke cases, though at the cost of false positives and weak calibration.

The model ranks risk reasonably well but overestimates probabilities, meaning recalibration and fairness evaluation are necessary before clinical use.

The model is useful for risk stratification, not diagnosis, and real-world deployment would require bias auditing, probability calibration, and external validation.”
